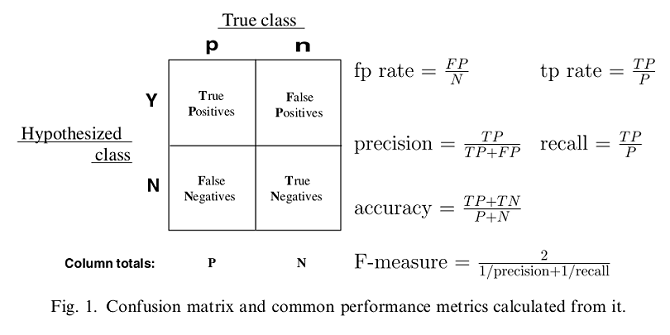

How to judge a binary classifier’s performance?

Precision, Recall, F-score:

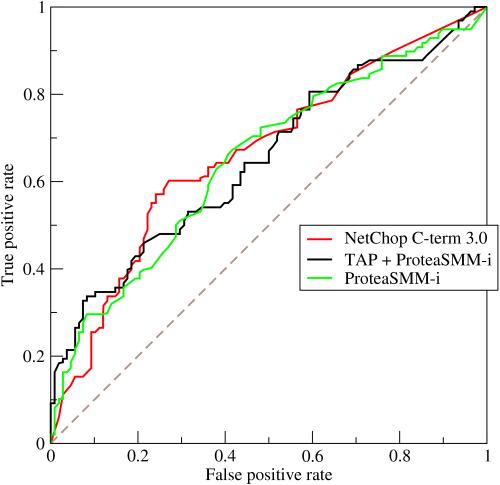
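The formulas above can be sketched in a few lines of Python. This is a minimal illustration that assumes the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), F-score as their weighted harmonic mean); the function name `precision_recall_f` and the example counts are made up for this sketch.

```python
def precision_recall_f(tp, fp, fn, beta=1.0):
    """Return (precision, recall, F-beta) from confusion-matrix counts.

    tp, fp, fn are the numbers of true positives, false positives and
    false negatives. beta=1.0 gives the usual F1 score.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    b2 = beta ** 2
    f_score = (1 + b2) * precision * recall / (b2 * precision + recall)
    return precision, recall, f_score


# Hypothetical counts: 8 true positives, 2 false positives, 8 false negatives.
p, r, f1 = precision_recall_f(tp=8, fp=2, fn=8)
print(p, r, f1)
```

With these counts the classifier is right about most of the examples it flags (high precision) but misses half of the actual positives (lower recall), and the F-score falls between the two.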

ROC, AUC

ROC (Receiver Operating Characteristic)

x-coordinate: FPR (false positive rate), FP / (FP + TN)

y-coordinate: TPR (true positive rate), TP / (TP + FN)
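To make the two axes concrete, here is a rough sketch of how the points of a ROC curve can be obtained by sweeping the decision threshold from high to low. The helper `roc_points` and the toy labels and scores are assumptions for illustration, not part of any library.

```python
def roc_points(labels, scores):
    """Return a list of (FPR, TPR) points, from (0, 0) to (1, 1).

    labels are 0/1 ground-truth values, scores are classifier outputs
    where a higher score means "more likely positive".
    """
    pos = sum(labels)
    neg = len(labels) - pos
    # Sort by decreasing score: lowering the threshold admits examples in this order.
    ranked = sorted(zip(scores, labels), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last example of a group of tied scores.
        if i + 1 == len(ranked) or ranked[i + 1][0] != score:
            points.append((fp / neg, tp / pos))
    return points


# Toy data: three positives and three negatives.
labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
points = roc_points(labels, scores)
for fpr, tpr in points:
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Each positive example moves the curve up and each negative example moves it right, so a classifier that ranks positives ahead of negatives hugs the top-left corner.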

- Why use ROC?

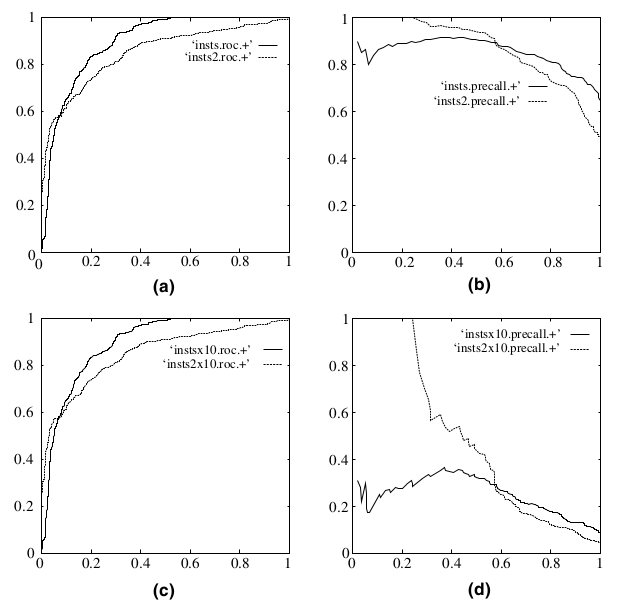

ROC curves have an attractive property: they are insensitive to changes in class distribution. If the proportion of positive to negative instances changes in a test set, the ROC curves will not change.
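The insensitivity to class distribution can be checked numerically. The sketch below uses made-up scores and a hypothetical helper `rates`: it evaluates the same classifier at one threshold on a 1:1 test set and on a 1:10 test set built by repeating every negative ten times. TPR and FPR stay the same, while precision drops.

```python
def rates(labels, scores, threshold):
    """Return (TPR, FPR, precision), predicting positive when score >= threshold."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    pos = sum(labels)
    neg = len(labels) - pos
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    return tp / pos, fp / neg, precision


# Balanced (1:1) toy test set.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.7, 0.3, 0.8, 0.4, 0.1]

# Skewed (1:10) test set: same positives, every negative repeated ten times.
pos_scores = [s for y, s in zip(labels, scores) if y == 1]
neg_scores = [s for y, s in zip(labels, scores) if y == 0]
skew_scores = pos_scores + neg_scores * 10
skew_labels = [1] * len(pos_scores) + [0] * (len(neg_scores) * 10)

balanced = rates(labels, scores, threshold=0.5)
skewed = rates(skew_labels, skew_scores, threshold=0.5)
print("1:1  TPR, FPR, precision:", balanced)
print("1:10 TPR, FPR, precision:", skewed)
```

TPR and FPR are each computed within a single class, so changing the ratio between the classes leaves them alone. Precision mixes counts from both classes, which is why precision-recall curves shift under skew.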

ROC and precision-recall curves under class skew: (a) ROC curves, 1:1; (b) precision-recall curves, 1:1; (c) ROC curves, 1:10; (d) precision-recall curves, 1:10.

AUC (Area Under Curve)

- Definition: The area under the ROC curve.

- Meaning: The AUC value is equivalent to the probability that the classifier ranks a randomly chosen positive example higher than a randomly chosen negative example.

- Notice: Random guessing produces the diagonal line between (0, 0) and (1, 1), which has an area of 0.5, so no realistic classifier should have an AUC less than 0.5. A classifier that scores below 0.5 can be turned into one that scores above 0.5 by inverting its predictions.
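The ranking interpretation of AUC can be computed directly by comparing every positive example with every negative example. The sketch below is a simple quadratic-time illustration, with ties counted as half a win (a common convention, assumed here); `auc_by_ranking` and the toy data are hypothetical names and values.

```python
def auc_by_ranking(labels, scores):
    """Estimate AUC as the fraction of (positive, negative) pairs ranked correctly.

    A pair counts 1 if the positive has the higher score, 0.5 on a tie, 0 otherwise.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

auc = auc_by_ranking(labels, scores)
# Negating the scores reverses the ranking, which mirrors the AUC around 0.5.
flipped = auc_by_ranking(labels, [-s for s in scores])
print(auc, flipped)
```

Here 8 of the 9 positive-negative pairs are ordered correctly. Reversing the ranking leaves only 1 of 9 correct, so the two values sum to 1, which illustrates why an AUC below 0.5 signals an inverted ranking rather than a genuinely worse classifier.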

Reference

Tom Fawcett, "An introduction to ROC analysis", Pattern Recognition Letters, 2006.